Introduction

Numerous contemporary data processing frameworks, such as Apache Spark, BigQuery, and Snowflake, effectively conceal their distributed nature. Nonetheless, it's important to recognize that the seamless processing of terabytes of data within a few seconds is made possible by the collaborative effort of numerous cluster nodes. These servers work in parallel, occasionally necessitating the exchange of portions of an entire dataset between them. This operation is commonly referred to as a "data shuffle". When optimising a Big Data task, the main point of developer’s focus is often reducing the frequency of shuffling, which is a resource-intensive process involving extensive network communication. In this discussion, we aim to illustrate how you can structure your BigQuery job to minimise shuffling.

The story behind the scenes

Behind the scenes lies a hidden story - now is the time to unveil it. Our mission was to enhance the efficiency of a task responsible for preparing a dataset intended for training a machine learning model. Initially, this job was quite straightforward, as it involved merging only 20 features generated by 20 distinct pipelines. Each table shared a comparable structure, comprised of only three columns:

- item_id - id of observed item

- dt - date of an observation

- value - value of an observation

Our target was joining all these features into one large table with the following schema:

- item_id

- dt

- value_1

- value_2

- …

The main challenge we faced with this task was its scale. Each day, we accumulated around 0.5 terabytes of fresh data across these 20 feature tables. When we initially considered aggregating these features over a span of 14 days, the result was a notably large dataset.

The first attempt

In our initial approach, we proceeded straightforwardly: we took the first table and gradually incorporated features from the others one by one. A pseudocode select query representation of this process would resemble the following (see Code 1.):

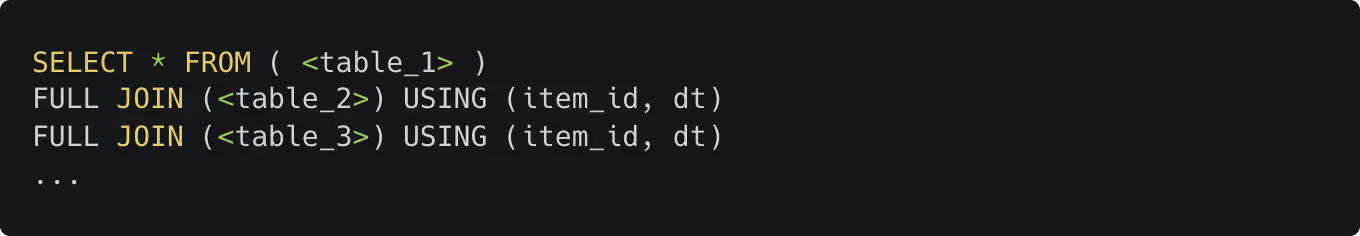

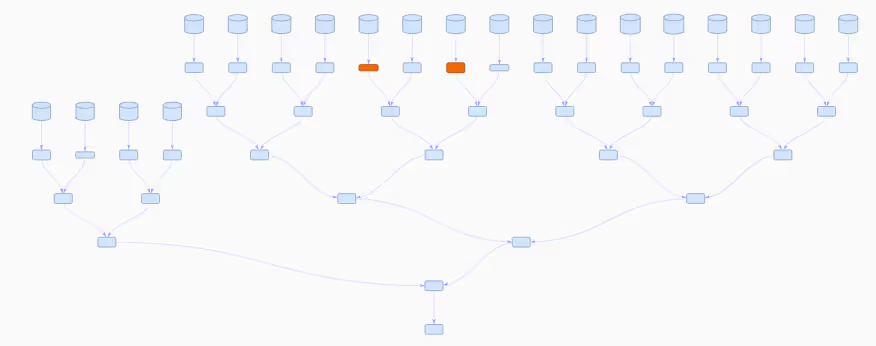

BigQuery changed this query into an execution plan that looked like a very long chain (see Image 1.).

This outcome was somewhat anticipated, yet it also held elements of surprise. It was anticipated because the structure closely mirrored our SQL code. However, SQL operates as a declarative language, not an imperative one. In essence, we specify the desired output rather than the step-by-step execution. Most database engines optimise query plans before execution. The execution plan produced for our query showed that BigQuery lacked an extensive query optimization module, or perhaps that specific query proved too intricate to optimise.

Problem identification and an improvement

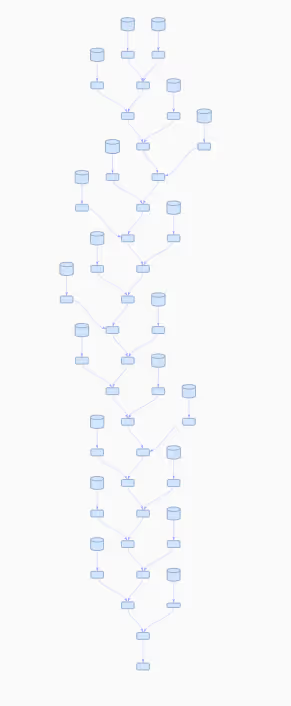

In a situation where the optimizer proved less than effective, we began to explore potential solutions ourselves. We observed that during the initial stages, the join operations were performed adequately. However, as we introduced additional features, the performance steadily declined. In the table below (see Image 2.), we illustrate the disparity between the initial and final join operations. The `slots_ms` metric is worth noting, as it reflects the overall CPU time expended during the processing of a particular stage.

The contrast is substantial, with roughly 610 slot seconds in the initial join, compared to around 37,000 slot seconds in the concluding join. It was anticipated that there would be an increase, as each join added an additional feature. Nevertheless, the magnitude of the escalation was quite surprising.

The primary challenge of our query can be illustrated as constructing a snowman using a single enormous snowball. It's straightforward to initiate a small snowball, but as we progress, moving the snowball becomes increasingly difficult. What if, instead, we began with numerous small snowballs and merged them cleverly? That was our concept - we reimplemented the joining process in a divide and conquer manner. At the outset, we firstly paired features – Table 1 with Table 2, Table 3 with Table 4, and so on. Afterwards, we combined the outcomes of the initial pairings, and in the following step, we joined pairs of datasets from the preceding stage, and so on. Ultimately, we arrived at a solitary dataset, which was our desired result.

A crucial point here is that we utilized the commutative property of a FULL JOIN. It doesn't matter whether we join Table A and Table B or the other way around – B and A. In either case, the result remains identical. It's important to highlight that our method would not function in the same way with a LEFT JOIN, for instance.

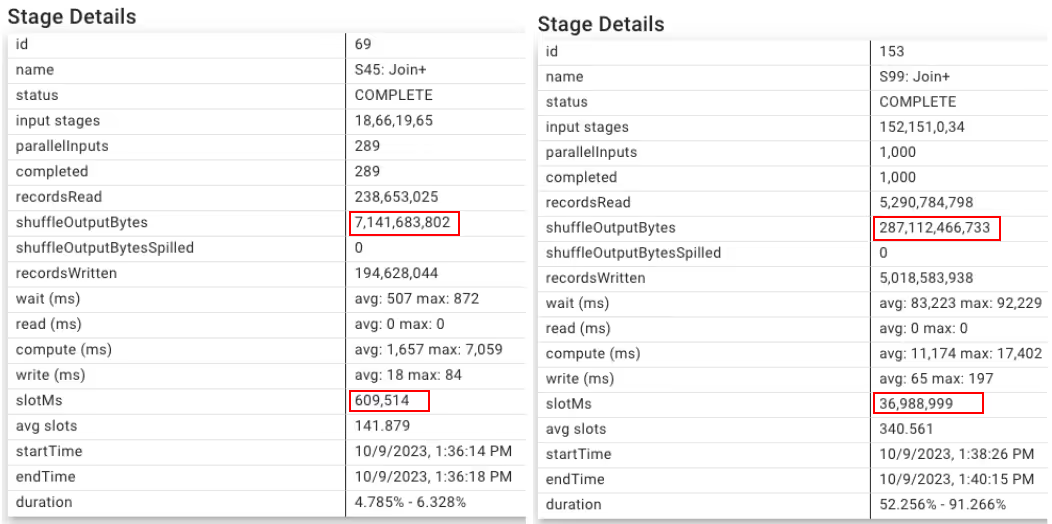

Let’s come back to our query. We built it from blocks which look like this (see Code 2.):

That adds a lot of boilerplate code, but it turns out that it changes the query plan too!

The new plan appears significantly better (see Image 3.)! In this updated approach, each join operation merges two datasets of roughly equivalent sizes. This prevents the danger of building one big dataset that then experiences multiple rounds of shuffle stages. We first combine smaller datasets before integrating them into the larger one. Moreover, all data from different tables now passes through a maximum of just five stages. These modifications are expected to decrease the volume of bytes shuffled during the job. Lastly, the entire job plan now fits on a single screen!

Performance comparison

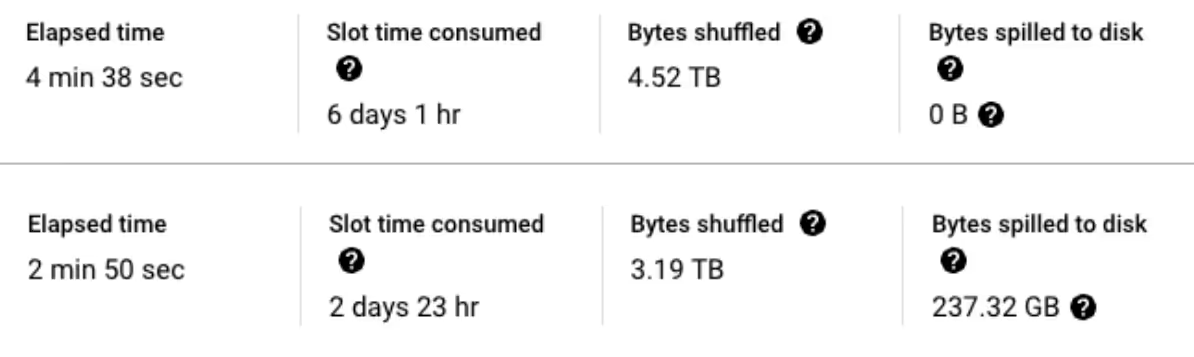

Let’s compare execution statistics when we build joined features for one day (see Image 4.).

The crucial metric to consider is the `totalSlotsMs`. Our latest solution is roughly twice as fast as the previous one. In terms of elapsed time, we've managed to reduce it by one-third. We've observed a comparable shift in the shuffle bytes metric. However, it's worth noting that after changing the query design, we began to experience disk spills. This is typically an indication of suboptimal performance. Even though it's an improvement over the initial solution. There is still room for further enhancement!

Conclusion

The structure of a SQL code is important in BigQuery. We managed to rewrite the query and improve its execution plan by doing so. Second note, shuffle matters - as always in a distributed framework. Last but not least, there is always room for improvement, even for the simplest queries. Our team of professionals specializes in optimizing BigQuery performance. If your company is facing challenges, we're the right experts to help.

Happy optimizations!

Contact us to boost your BigQuery efficiency today!

.avif)

.avif)