Introduction to Machine Learning Operations on Azure

The intersection of machine learning practices and Microsoft's Azure platform has given rise to an approach known as MLOps on Azure. Short for Machine Learning Operations, MLOps represents a shift in how organizations manage machine learning projects. Why do you need MLOps? At its core, it brings together data scientists, developers, and operations teams to streamline the end-to-end ML lifecycle. A detailed explanation of MLOps principles can be found in my previous blog post. This article is an introduction to building an MLOps platform on Azure. As we go deeper, you'll see how Azure's tools and services provide a solid foundation for implementing MLOps. Buckle up as we explore how machine learning practices fit together with Azure's infrastructure.

Integrated machine learning stack in Azure

Your journey into the MLOps process on Azure begins with setting up an environment tailored to your machine learning systems. This step lays the foundation for a successful MLOps implementation. Assuming you already have your Azure subscription and resource group set up, the standard approach would be to manually create every resource you need, such as a virtual machine, a storage account for your data and models, a tool to orchestrate your data and training pipelines, and Application Insights for monitoring, and then connect them together into a machine learning platform. Microsoft Azure simplifies this process in the form of the Azure Machine Learning workspace. It abstracts the underlying infrastructure needed for machine learning tasks such as model training and model deployment. You can provision compute resources directly within the workspace, eliminating the need to set up separate virtual machines. Other necessary resources are created automatically along with the workspace.

By using a single Azure ML workspace instance, you can centralize your MLOps configurations, streamline your workflow, and manage all aspects of your ML system operations within one cohesive environment. This approach simplifies setup, reduces complexity, and improves collaboration across data analysts, data scientists, developers, and operations teams.

Standardize machine learning workflows using Azure DevOps

Azure DevOps, a powerful suite of development tools, plays a pivotal role in ensuring efficient version control within your machine learning projects. In the realm of MLOps, maintaining the integrity of code and models across iterations is paramount. Azure DevOps provides a comprehensive version control system that empowers data teams to collaborate seamlessly, track changes, and revert to previous states when needed. With Git repositories at its core, Azure DevOps enables data scientists and developers to commit code changes, manage branches, and merge contributions effortlessly. Whether you're fine-tuning algorithms or enhancing model features, Azure DevOps safeguards against conflicts and facilitates a systematic approach to version management.

Azure DevOps and the Azure ML workspace work together to provide a comprehensive CI environment for machine learning projects. The Azure ML workspace allows you to define experiments as code or with a built-in visual designer. Pipelines can automatically rerun these experiments whenever the code changes, ensuring consistent and reproducible results. Azure DevOps supports the creation of continuous integration and continuous deployment (CI/CD) pipelines, which are crucial for managing the entire machine learning lifecycle. With them, data teams can build MLOps workflows for machine learning infrastructure deployment, data collection and transformation, model development, and model deployment to the prediction service. The same pipelines can also incorporate model monitoring, for example to detect model performance degradation.

In essence, Azure DevOps harmonizes the power of Git's version control with seamless integration into the machine learning lifecycle. Developers and data scientists can seamlessly integrate code changes, automated testing, experimentation, and deployment through well-defined pipelines. This synergy fosters collaboration, empowers experimentation, and fortifies the foundation of MLOps by enabling teams to traverse the intricate landscape of versioning with precision and efficiency.

Train, deploy and manage models in Azure

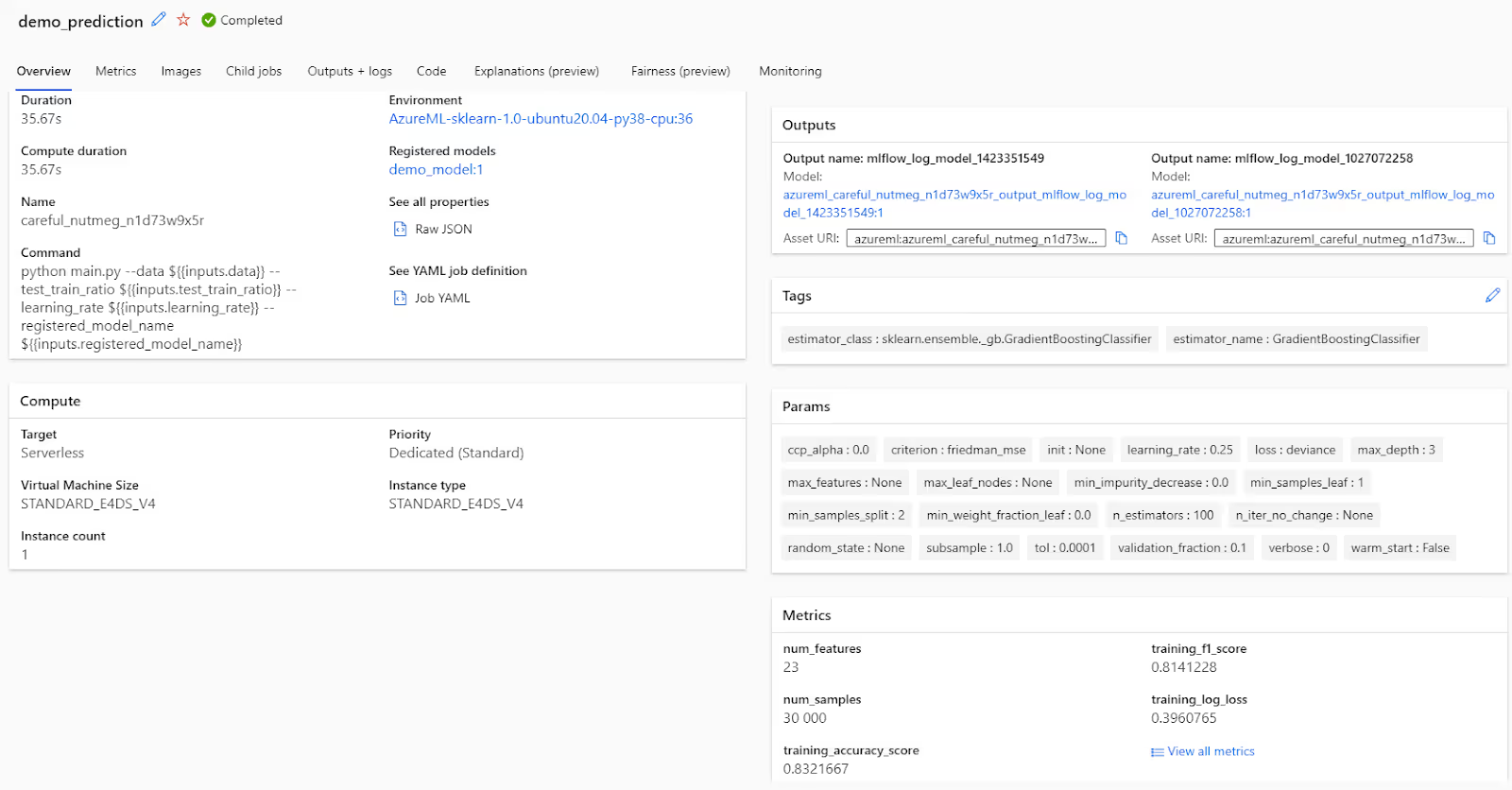

Automating the training and deployment of machine learning models is the heart of MLOps practices and of the machine learning model lifecycle, and Azure Machine Learning provides a comprehensive suite of tools to automate and monitor this crucial phase. This aligns with the fundamental MLOps principle of reproducibility. By defining custom environments and Python scripts along with pipeline automation, you can orchestrate data pre-processing in data engineering pipelines, and model creation and optimization in training pipelines. Azure ML's experiment tracking captures the essential details of each training run, so you can trace its history, and it also serves as a first validation step for a newly trained model.

Continuous training in Azure Machine Learning can also be achieved by using Azure Pipelines to orchestrate the retraining process. With this approach, you can trigger retraining of your machine learning models on predefined schedules or in response to events (such as the ones generated by model drift monitoring), ensuring that models stay up to date with the latest input data. It allows you to automate the entire ML pipeline, from ingestion from data sources and feature engineering to model retraining and validation, enabling a continuous training cycle.
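
To make the idea of an event-based retraining trigger more concrete, here is a minimal sketch of a drift check. It is not Azure's drift monitoring implementation. The function names, the choice of the Population Stability Index (PSI) as the drift score, the equal-width binning, and the 0.2 threshold are all assumptions made for illustration. In practice, a result of `True` would be the signal that starts the retraining pipeline.

```python
import math
from typing import List, Sequence


def population_stability_index(
    baseline: Sequence[float], current: Sequence[float], bins: int = 10
) -> float:
    """PSI between two samples, using equal-width bins over the baseline range."""
    lo, hi = min(baseline), max(baseline)
    # Fall back to a width of 1.0 if every baseline value is identical.
    width = (hi - lo) / bins or 1.0

    def bin_shares(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            # Clamp out-of-range values into the first or last bin.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    expected = bin_shares(baseline)
    actual = bin_shares(current)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


def should_retrain(
    baseline: Sequence[float], current: Sequence[float], threshold: float = 0.2
) -> bool:
    """Hypothetical trigger: request retraining when the drift score is too high."""
    return population_stability_index(baseline, current) > threshold
```

A scheduled job could call `should_retrain` on a feature's training-time values and its most recent production values, and queue the training pipeline only when it returns `True`.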

Once the model performs well enough, you can use the model registry, whose main purpose is to track model versions. From there, the transition to deployment on the model prediction service is straightforward. Azure ML encourages automated model containerization, packaging your trained model within a Docker image for consistency. The model deployment step consists of pushing the image to the connected Azure Container Registry and deploying it on Azure Kubernetes Service (AKS) or another endpoint for scalable and reliable execution. The end-to-end automation of training and deployment within Azure ML ensures that your current production model is not only developed effectively but also deployed in a manner that's efficient, consistent, and ready for real-world demands.
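
The role of a model registry can be illustrated with a small in-memory sketch. This is not the Azure ML registry API. `ModelVersion`, `InMemoryRegistry`, and their methods are hypothetical names, and the only behavior shown is the one described above: every registration of the same model name gets the next version number, so earlier versions stay retrievable.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass(frozen=True)
class ModelVersion:
    name: str
    version: int
    metrics: Dict[str, float]


@dataclass
class InMemoryRegistry:
    """Hypothetical stand-in for a model registry, for illustration only."""

    _models: Dict[str, List[ModelVersion]] = field(default_factory=dict)

    def register(self, name: str, metrics: Dict[str, float]) -> ModelVersion:
        # Each registration under the same name receives the next version number.
        versions = self._models.setdefault(name, [])
        model_version = ModelVersion(name, len(versions) + 1, dict(metrics))
        versions.append(model_version)
        return model_version

    def latest(self, name: str) -> ModelVersion:
        return self._models[name][-1]

    def best(self, name: str, metric: str) -> ModelVersion:
        # Pick the version with the highest value of the given metric.
        return max(self._models[name], key=lambda m: m.metrics[metric])
```

Keeping the metrics next to each version makes it possible to deploy the best version rather than simply the newest one.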

Azure Machine Learning support for automated testing

In the realm of machine learning, automated testing stands as a beacon of quality assurance, ensuring that models perform reliably in various scenarios. Automated testing begins with the creation of test datasets that represent real-world scenarios. These datasets should cover a range of input variations and edge cases to comprehensively validate model behavior. Next, you define the evaluation metrics that indicate the desired performance of your model. These could include accuracy, precision, recall, F1-score, and more, depending on your model's objectives. End-to-end testing involves running the entire model pipeline on test data and verifying the final predictions. This ensures that all components interact correctly and produce the expected outcomes. You can include automated testing steps within your CI/CD pipeline to ensure that new model versions are thoroughly tested before deployment.
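
As a rough illustration of such a testing step, the sketch below computes the metrics mentioned above for a binary classifier and checks them against minimum thresholds. The function names and the threshold values are assumptions. A real pipeline would more likely use a metrics library and thresholds agreed on with stakeholders.

```python
from typing import Dict, Sequence


def binary_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Accuracy, precision, recall and F1 for labels encoded as 0 and 1."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)

    # Guard against division by zero when a class is never predicted or present.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


def passes_quality_gate(metrics: Dict[str, float], thresholds: Dict[str, float]) -> bool:
    """Hypothetical gate: every listed metric must reach its minimum value."""
    return all(metrics[name] >= minimum for name, minimum in thresholds.items())
```

In a CI/CD pipeline, a test step could fail the build whenever `passes_quality_gate` returns `False`, which keeps an underperforming model version from reaching deployment.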

Automated testing identifies bugs and discrepancies in model predictions. Detecting issues early prevents faulty models from being deployed, maintaining the quality of deployed solutions. By validating model predictions against known test cases, automated testing also improves model transparency: you can see where predictions diverge from expectations and identify cases where models might fail. Automated tests serve as a clear communication channel between data scientists, developers, and stakeholders, in line with the MLOps principle of collaboration. Models that pass automated tests are more likely to perform well in production. This leads to robust deployments and reduces the risk of operational disruptions.

How to monitor MLOps platform on Azure?

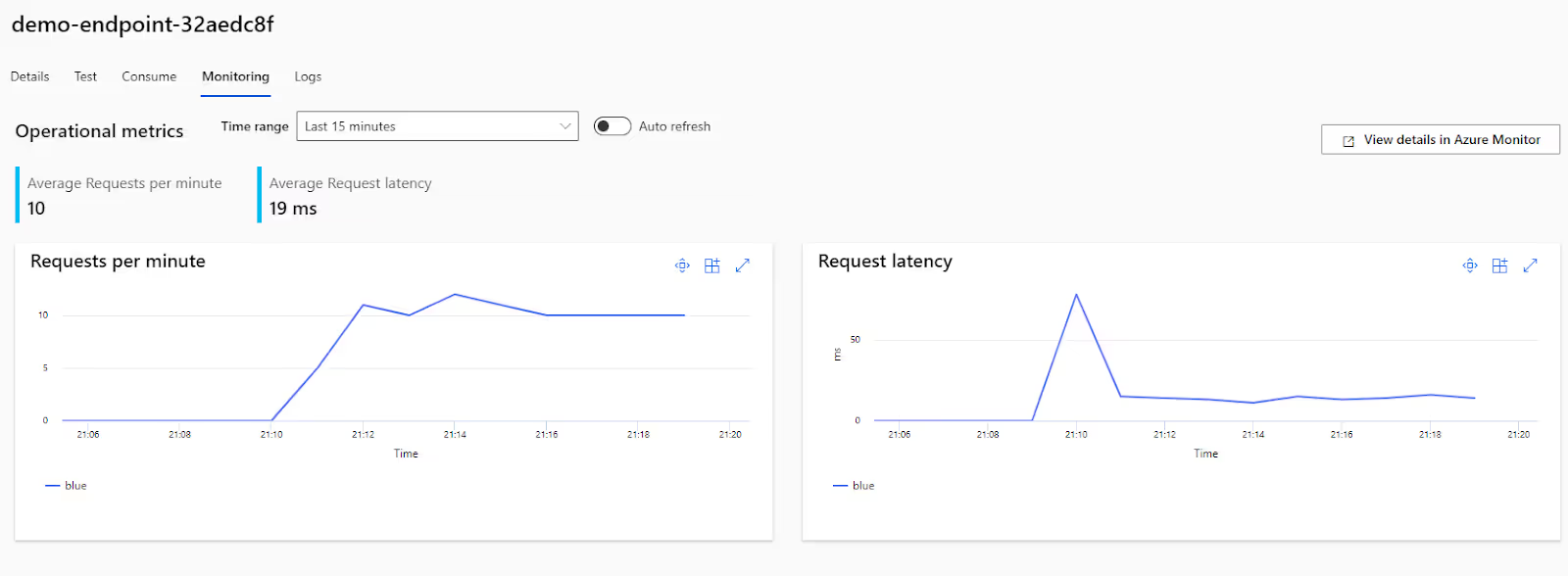

In the realm of MLOps, monitoring and logging form the bedrock of proactive model management. Azure Monitor, an integral part of Azure, empowers data teams to gain real-time insights into the performance and health of deployed machine learning models. By setting up custom metrics, alerts, and dashboards, you can stay informed about critical aspects such as prediction accuracy, resource utilization, and latency. Azure Application Insights further augments this process by providing granular visibility into the end-to-end user experience, enabling you to detect bottlenecks and optimize model performance.
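
The logic behind a latency alert can be sketched in a few lines. The example below does not use Azure Monitor or Application Insights. `LatencyMonitor`, the window size, and the 95th-percentile threshold are hypothetical, and the percentile uses the nearest-rank method. It only shows the kind of rule an alert on a custom metric encodes.

```python
from collections import deque
from typing import Deque, Optional


class LatencyMonitor:
    """Hypothetical rolling-window monitor for prediction latency."""

    def __init__(self, window: int = 100, p95_threshold_ms: float = 500.0) -> None:
        # Only the most recent `window` samples are kept.
        self.samples: Deque[float] = deque(maxlen=window)
        self.p95_threshold_ms = p95_threshold_ms

    def record(self, latency_ms: float) -> None:
        self.samples.append(latency_ms)

    def p95(self) -> Optional[float]:
        if not self.samples:
            return None
        ordered = sorted(self.samples)
        # Nearest-rank percentile, computed with integer arithmetic.
        rank = (95 * len(ordered) + 99) // 100
        return ordered[rank - 1]

    def should_alert(self) -> bool:
        p95 = self.p95()
        return p95 is not None and p95 > self.p95_threshold_ms
```

A scoring service could call `record` after every request and check `should_alert` periodically. In a real setup, the equivalent rule would be configured as an alert on the collected metric rather than evaluated in application code.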

By leveraging the capabilities of Azure Monitor and Azure Application Insights, Azure ML workspace empowers you to maintain a vigilant watch over your deployed machine learning models. This proactive monitoring approach helps you detect and address performance issues promptly, ensuring that your models continue to deliver accurate and reliable results to end-users.

Data Management and Governance

Effective data management and governance are fundamental pillars of MLOps. Azure ML workspace keeps track of data lineage, allowing you to trace the origin of each dataset and the transformations applied to it. This transparency is crucial for maintaining data quality, understanding how data has been processed, and ensuring reproducibility. The workspace provides built-in version control for datasets. This means you can create and track different versions of datasets as they evolve over time. The versioning ensures that you always have access to historical data for auditing and analysis. The workspace also enables data profiling, allowing you to assess the quality of your datasets. You can identify missing values, outliers, and other data anomalies that might impact the performance of your machine learning models. Azure ML workspace supports collaborative data governance, allowing data scientists, data engineers, and business stakeholders to collaborate on data preparation, quality assurance, and compliance efforts.
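
To show what a basic data profile looks for, here is a minimal sketch that counts missing values and flags outliers in a single numeric column. It is independent of Azure ML's own profiling. The function name, the use of `None` for missing values, and the 1.5 × IQR outlier rule are assumptions chosen for illustration.

```python
import statistics
from typing import Dict, List, Optional, Sequence


def profile_column(values: Sequence[Optional[float]]) -> Dict[str, object]:
    """Count missing entries and flag outliers with the 1.5 * IQR rule."""
    present = [v for v in values if v is not None]
    outliers: List[float] = []
    # Quartiles are only meaningful with a handful of observations.
    if len(present) >= 4:
        q1, _, q3 = statistics.quantiles(present, n=4)
        iqr = q3 - q1
        low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
        outliers = [v for v in present if v < low or v > high]
    return {
        "count": len(values),
        "missing": len(values) - len(present),
        "outliers": outliers,
    }
```

Running a check like this on every new dataset version gives an early warning when a data source starts delivering incomplete or implausible values.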

Azure Machine Learning workspace can also integrate with services such as Azure Data Factory (a cloud-based data integration service that enables you to create, schedule, and manage data pipelines) and Microsoft Purview (a data governance solution that helps you discover, classify, and manage your data assets). Combined with these two services, Azure ML workspace lets you establish a robust data management and governance framework. This framework ensures that your machine learning projects are fueled by high-quality, compliant, and well-organized data, leading to more accurate and reliable model outcomes.

Scaling Strategies and MLOps resource management on Azure

Resource management within Azure Machine Learning workspace is a key strategy for optimizing MLOps efficiency. The workspace itself becomes the control center, offering an array of tools to manage the resources powering your machine learning workloads. With Azure Resource Manager templates, you can precisely define and automate the allocation of resources, ensuring consistency across deployments. In tandem, the built-in capabilities of Azure ML workspace let you adjust resource allocation dynamically as workloads change. As models are trained and deployed and data is processed, the workspace scales resources to match demand. This integration of resource management within the workspace gives your MLOps pipeline the agility and precision it needs across different kinds of machine learning workloads while keeping efficiency high.
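
Scaling to match demand comes down to a simple rule, which the sketch below illustrates. It is not how Azure ML implements autoscaling. The function name and parameters are hypothetical. It only shows the idea of sizing a compute cluster from the pending workload while staying between a configured minimum and maximum number of nodes.

```python
def desired_node_count(
    pending_jobs: int, jobs_per_node: int, min_nodes: int, max_nodes: int
) -> int:
    """Nodes needed for the pending work, clamped to the allowed range."""
    if jobs_per_node <= 0:
        raise ValueError("jobs_per_node must be positive")
    if min_nodes > max_nodes:
        raise ValueError("min_nodes must not exceed max_nodes")
    # Ceiling division without floating point.
    needed = -(-pending_jobs // jobs_per_node)
    return max(min_nodes, min(needed, max_nodes))
```

Setting the minimum to zero lets an idle cluster scale down completely, while the maximum caps the cost of a sudden burst of jobs.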

Ensuring Security and Compliance in MLOps with Azure

Security and compliance guard against potential risks and help ensure the ethical use of AI technologies. Azure Machine Learning workspace addresses this with a robust set of security features that safeguard both data and models. Through role-based access control (RBAC), Azure ML enables fine-grained permissions, granting authorized users tailored levels of access. Encryption at rest and in transit ensures that data remains shielded from unauthorized access during storage and transmission. Azure ML workspace aligns with compliance standards such as GDPR and HIPAA, providing the framework to navigate intricate regulatory landscapes. Together, these security and compliance measures give your MLOps work within Azure ML a fortified foundation, assuring not only the integrity of your machine learning solutions but also adherence to stringent ethical standards.

Feedback Loops: Enhancing Models in Azure Machine Learning

The feedback loop is a crucial component of MLOps that fosters continuous improvement in machine learning models. It involves the collection of real-world data and user feedback post-deployment, which is then used to retrain and enhance the models. This iterative process ensures that models evolve to meet changing needs and maintain high quality.

Feedback provides valuable insights into how models perform in diverse real-world scenarios. By incorporating this feedback into retraining, models can adapt to dynamic and evolving environments. User feedback often highlights limitations and edge cases where the model may not perform optimally. The feedback loop helps identify and rectify these anomalies, improving overall prediction accuracy. The iterative nature of the feedback loop leads to a continuous cycle of model improvement. As new data and feedback are collected, models are iteratively fine-tuned, resulting in enhanced accuracy and relevance. Models that consistently improve based on user feedback lead to higher user satisfaction and adoption rates. Users gain confidence in the model's predictions, leading to increased trust and reliance on the system.

In conclusion, the feedback loop is an integral part of MLOps that ensures machine learning models remain relevant, accurate, and effective. By incorporating real-world data and user feedback, models continually adapt and improve, ultimately enhancing their quality and value in various application scenarios.

Summary

In the dynamic landscape of modern machine learning operations, harnessing the capabilities of Azure Machine Learning emerges as a strategic advantage. This comprehensive platform offers a multitude of tools, seamlessly woven together to streamline every facet of the machine learning lifecycle. From data preparation and experimentation to model training, deployment, and ongoing monitoring, Azure ML workspace empowers organizations to navigate the complexities of MLOps with finesse. By providing an integrated environment for collaboration, version control, and automated testing, Azure ML workspace fosters efficient teamwork and quality assurance. The integration with Azure DevOps amplifies the synergy between development and operations, establishing a robust framework for continuous integration and delivery. Furthermore, the incorporation of Azure's data management, governance, and security features ensures the responsible and compliant deployment of machine learning solutions. Embracing the feedback loop within Azure ML workspace completes the cycle, driving iterative enhancements that elevate model quality and relevance over time. In essence, Azure ML workspace stands as a guiding beacon in the MLOps journey, equipping organizations with the tools they need to drive innovation, efficiency, and impactful outcomes in the realm of machine learning.
